This continues the reprise of my series of page 1 critiques - you can read about the project HERE, and there's a list of all the critiques so far too.

I'm also posting some of these on my YouTube channel (like, subscribe yadda yadda).

I turn to another of my favorite books from the past 10 years: Strange the Dreamer, by Laini Taylor.

I have reviewed the book.

First of all, I'm going to cut and paste the disclaimers, and anyone prone to outrage really should read them:

It's very hard to separate one's tastes from a technical critique. There are page 1s from popular books in which I would find multiple faults. I didn't, for example, like page 1 of Terry Goodkind's Wizard's First Rule (I didn't pursue the rest of the book). But that book has 150,000+ ratings on Goodreads and a great average score of 4.12, and Goodkind is a #1 NYT bestseller. His first page clearly did a great job for many people.

I'm not always right *hushed gasp*. You will likely be able to find a successful and highly respected author who will tell you the opposite to practically every bit of advice I give. Possibly not the same author in each case though.

The art of receiving criticism is to take what's useful to you and discard the rest. You need sufficient confidence in your own vision/voice that, whilst criticism may cause you to adjust course, you're not about to do a U-turn for anyone. If you act on every bit of advice, you'll get crit-burn: your story will be pulled in different directions by different people. It will stop being yours and turn into some Frankenstein's monster that nobody will ever want to read.

Additionally - don't get hurt or look for revenge. The person critiquing you is almost always trying to help you (it's true in some groups there will be the occasional person who is jealous/mean/misguided but that's the exception, not the rule). That person has put in effort on your behalf. If they don't like your prose it's not personal - they didn't just slap your baby.

I've flicked through some of the pages looking for one where I have something to say - something that hopefully is useful to the author and to anyone else reading the post.

I've posted the unadulterated page first, then again with comments inset and at the end.

----------------------------------------------------------------------------------------------------------------

MYSTERIES OF WEEP

Names may be lost or forgotten. No one knew that better than Lazlo Strange. He'd had another name first, but it had died like a song with no one left to sing it. Maybe it had been an old family name, burnished by generations of use. Maybe it had been given to him by someone who loved him. He liked to think so, but he had no idea. All he had were Lazlo and Strange—Strange because that was the surname given to all foundlings in the Kingdom of Zosma, and Lazlo after a monk's tongueless uncle.

"He had it cut out on a prison galley," Brother Argos told him when he was old enough to understand. "He was an eerie silent man, and you were an eerie silent babe, so it came to me: Lazlo. I had to name so many babies that year I went with whatever popped into my head." He added, as an afterthought, "Didn't think you'd live anyway."

That was the year Zosma sank to its knees and bled great gouts of men into a war about nothing. The war, of course, did not content itself with soldiers. Fields were burned; villages, pillaged. Bands of displaced peasants roamed the razed countryside, fighting the crows for gleanings. So many died that the tumbrils used to cart thieves to the gallows were repurposed to carry orphans to the monasteries and convents. They arrived like shipments of lambs, to hear the monks tell it, and with no more knowledge of their provenance than lambs, either. Some were old enough to know their names at least, but Lazlo was just a baby, and an ill one, no less.

"Gray as rain, you were," Brother Argos said. "Thought sure you'd die, but you ate and you slept and your color came normal in time. Never cried, never once, and that was unnatural, but we liked you for it fine. None of us became monks to be nursemaids."

To which the child Lazlo replied, with fire in his soul, "And none of us became children to be orphans."

But an orphan he was, and a Strange, and though he was prone to fantasy, he never had any delusions about that. Even as a little boy, he understood that there would be no revelations. No one was coming for him, and he would never know his own true name.

Which is perhaps why the mystery of Weep captured him so completely.

--------------------------------------------------------------------------------------------------------------

It's worth noting, as I did for Senlin Ascends, that just because I think this is a great book, it doesn't necessarily follow that it has a great page 1, any more than it means it has a great cover(*).

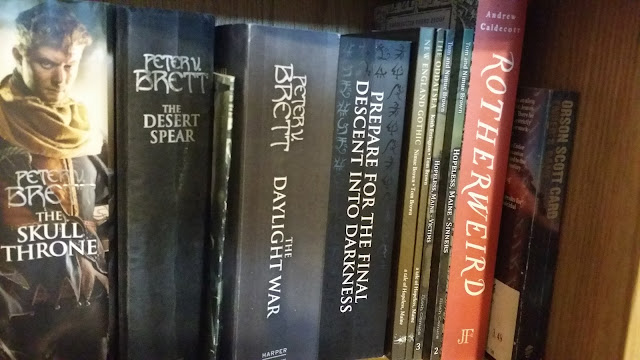

(*) My copy has this cover

... it's OK.

MYSTERIES OF WEEP

What do we think of chapter titles? Me, I'm not a big fan, but not opposed to them either. This one is fine.

Names may be lost or forgotten. No one knew that better than Lazlo Strange. He'd had another name first, but it had died like a song with no one left to sing it.

As a first line it's pretty neutral, but as the first three lines (all short) it's good. We immediately have a character to focus on. This isn't disembodied chat; we're not staring at the mountains or describing the weather. There's a person, and he has an interesting name. The most important thing is that we immediately know we're in the hands of an author who wields words with skill. His name had died like a song with no one left to sing it. That's non-standard use of the language - that's a line reaching toward poetry. This is a writer who understands the power of writing on the small scale and has declared her intention to do just that for us.

Maybe someone would call that purple or flowery. They'd be wrong (insofar as anyone can be wrong about a subjective judgement): this is on point, a direct hit on my taste centre. Purple or over-flowery language is certainly a risk for anyone attempting this sort of impact. Often people who try this fail painfully and the result is cringeworthy to read - though again, tastes vary and there will be readers who eat up the purplest of purple. Anyway - onwards!

Maybe it had been an old family name, burnished by generations of use.

Immediately we're hit by another fine line. A name burnished by generations of use. It's a small thing, but applying the familiar concept of burnished by use to something intangible, like a name, rather than an object, is just a nice linguistic step. Taylor is brave enough to colour outside the lines, and skilled enough to make it look good.

Maybe it had been given to him by someone who loved him. He liked to think so, but he had no idea. All he had were Lazlo and Strange—Strange because that was the surname given to all foundlings in the Kingdom of Zosma, and Lazlo after a monk's tongueless uncle. "He had it cut out on a prison galley," Brother Argos told him when he was old enough to understand. "He was an eerie silent man, and you were an eerie silent babe, so it came to me: Lazlo. I had to name so many babies that year I went with whatever popped into my head." He added, as an afterthought, "Didn't think you'd live anyway."

A question has been posed, and we're speculating on it. We have a tiny bit of general worldbuilding (Zosma) and a nice bit of that pinpoint detail that I'm always encouraging you to use. Will the fact that the monk's uncle was tongueless ever be important, or even mentioned again? (Spoiler: no, and I don't think so.) But the fact that we get this interesting, useless, very specific detail makes it all seem more real and less generic; it grounds us and adds colour.

That was the year Zosma sank to its knees and bled great gouts of men into a war about nothing.

That, right there, is an excellent line. Now you know that you're in for a verbal treat. I might have tweaked it slightly and opened with that, but here is fine too.

The war, of course, did not content itself with soldiers. Fields were burned; villages, pillaged. Bands of displaced peasants roamed the razed countryside, fighting the crows for gleanings. So many died that the tumbrils used to cart thieves to the gallows were repurposed to carry orphans to the monasteries and convents. They arrived like shipments of lambs, to hear the monks tell it, and with no more knowledge of their provenance than lambs, either. Some were old enough to know their names at least, but Lazlo was just a baby, and an ill one, no less.

Worldbuilding wrapped around great imagery. And all of it directly pertinent to the character, our focus, our question.

"Gray as rain, you were," Brother Argos said. "Thought sure you'd die, but you ate and you slept and your color came normal in time. Never cried, never once, and that was unnatural, but we liked you for it fine. None of us became monks to be nursemaids."

I say that description should illuminate the observer as well as the object. Here Lazlo is the object, and in having him described by the monk we're learning about the monk's character too. He seems to be a no-nonsense, practical man, his compassion delivered sparingly.

To which the child Lazlo replied, with fire in his soul, "And none of us became children to be orphans."

Lazlo's first words inject character into him. That's good.

But an orphan he was, and a Strange, and though he was prone to fantasy, he never had any delusions about that. Even as a little boy, he understood that there would be no revelations. No one was coming for him, and he would never know his own true name.

Which is perhaps why the mystery of Weep captured him so completely.

And by the foot of page 1 we're returned to the chapter title, the question that has hovered over all these words. So, encouraging us to turn the page, we have the super-high quality of the prose, the question of Lazlo Strange's origin and destination (both seemingly uncertain), and the mystery of Weep, which has captured our main character and which I immediately want to know about too. It sounds intriguing in and of itself - what kind of a name is Weep?

++++

So, how was it as a first page? Very good, I thought. It's a tour de force of great prose, it brings two characters to life with minimal space, deploying some dialogue to great effect, and it presents us with both problems and questions.

The problem is, admittedly, a general situational one - an orphan in a war-torn world - but still, our guy isn't safe; his situation sounds dangerous and precarious. And the questions are both specific (What is this mystery? What's Weep?) and general (Who is this strange little boy the book is named for?).

There's not much room on page 1, not much time to hook a reader, but Taylor's done it as far as I'm concerned. And the promises of quality and of intrigue that she makes here are fulfilled in spades. Both this book and the one that completes the duology are brilliant, full of imagination and emotion. Read 'em!